INSPIRE: An Interactive Image Assisted Non-Photorealistic Rendering System

The 11th Pacific Conference on Computer Graphics and Applications (PG'03), Canmore, Canada, October 8-10, 2003

Two prominent issues in non-photorealistic rendering (NPR) are extracting feature points for placing strokes and maintaining the frame-to-frame coherence and density of these feature points. Most existing NPR systems address these two issues by operating directly on objects, which can be not only expensive but also dependent on the model representation. We present an interactive non-photorealistic rendering system, INSPIRE, which performs feature extraction both in image space, on intermediate rendered images, and in object space, on models of various representations (e.g., point, polygon, or hybrid models), without needing connectivity information. INSPIRE performs a two-step rendering process. The first step resembles traditional rendering with slight modifications and is often more efficient to render; it is followed by the extraction of feature points and their 3D properties in image and/or object space. The second step renders only these feature points, either by directly drawing simple primitives or by additionally performing texture mapping to obtain different NPR styles. In the second step, strategies are developed to ensure frame-to-frame coherence in animation. Because its computational overhead is small and all operations are performed in vertex and pixel shaders on widely available programmable graphics hardware, INSPIRE achieves interactive NPR rendering in most of the styles of existing NPR systems, while offering more flexibility in model representation and sacrificing little rendering speed.
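The first rendering step writes several per-pixel attributes into one intermediate image; the figure caption on this page lists the channel assignments (normals in red and green, seed points in blue, N·V in alpha). The following is a minimal CPU-side sketch of that packing for a single fragment. It assumes 8-bit RGBA channels, a [-1, 1] to [0, 255] remapping of the normal components, a blue value of 0 meaning "no seed point", and front-facing normals (nz >= 0) when decoding. The function names and these encoding choices are hypothetical illustrations, not the paper's shader code.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def quantize(x: float) -> int:
    """Clamp x to [0, 1] and map it to an 8-bit channel value."""
    return int(round(min(max(x, 0.0), 1.0) * 255))


def encode_pixel(normal_eye: Vec3, view_eye: Vec3,
                 seed_intensity: Optional[float] = None) -> Tuple[int, int, int, int]:
    """Pack one fragment's attributes into RGBA8 (hypothetical layout).

    normal_eye and view_eye are unit vectors in eye space; view_eye points
    from the surface toward the camera.
    """
    nx, ny, nz = normal_eye
    vx, vy, vz = view_eye
    # R, G: x and y of the eye-space normal, remapped from [-1, 1] to [0, 1].
    r = quantize(0.5 * nx + 0.5)
    g = quantize(0.5 * ny + 0.5)
    # B: 0 means "no seed point"; a seed stores its shading intensity in [1, 255].
    b = 0 if seed_intensity is None else max(1, quantize(seed_intensity))
    # A: N.V, which approaches 0 at silhouettes (negative values clamp to 0).
    a = quantize(nx * vx + ny * vy + nz * vz)
    return r, g, b, a


def decode_normal(r: int, g: int) -> Vec3:
    """Recover a unit normal from R and G, assuming nz >= 0 (front-facing)."""
    nx = r / 255 * 2 - 1
    ny = g / 255 * 2 - 1
    nz = math.sqrt(max(0.0, 1.0 - nx * nx - ny * ny))
    return nx, ny, nz
```

For example, a surface facing the camera, `encode_pixel((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))`, yields `(128, 128, 0, 255)`, while a normal perpendicular to the view direction gets an alpha of 0. Storing only two normal components fits the normal and N·V into one RGBA target; the third component is recoverable because the normal has unit length.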

|

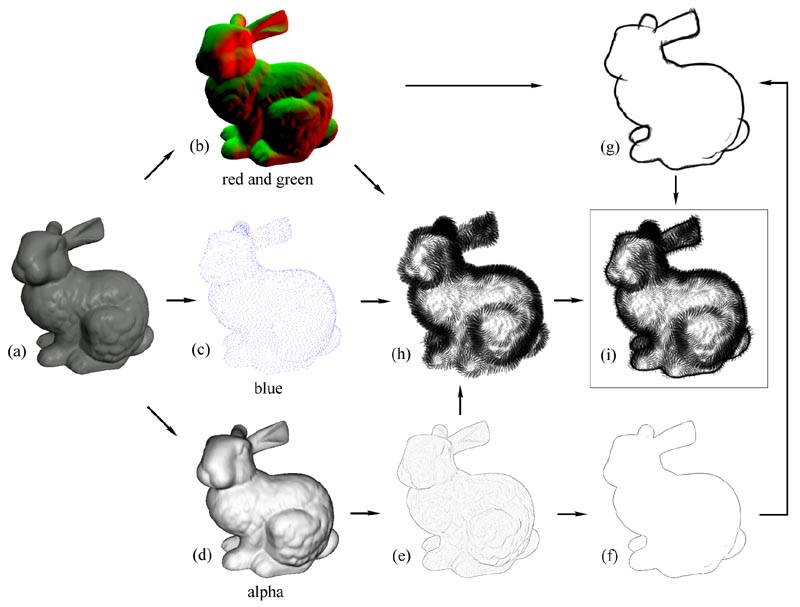

The general rendering pipeline includes three steps

of rendering. Step 1: the object (a) is rendered by encoding different

information in different frame buffer channels: (b) red and green channels store eye space normals; (c) blue channel encodes projected seed points (and optionally their shading intensities); (d) alpha channel stores the dot-product between surface normals and viewing directions. Step 2: image filtering is performed on alpha channel (d) to obtain edge points (e), which is further classified into strong (f) and weak edge points. Step 3: rendering strokes at edge points. The strong edge points are rendered using strokes of one style (g) while the weak edge points are rendered using strokes of another style (h). All strokes are oriented based on their corresponding normals in (b). The final image (i) is a combination of image (g) and (h). |
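The caption does not say which image filter produces the edge points or how strong and weak points are separated. As a stand-in, the sketch below applies a per-pixel double threshold to the alpha (N·V) channel, on the reasoning that N·V falls toward 0 near silhouettes, and orients each stroke perpendicular to the screen-space normal read from the red and green channels. The thresholds `strong_max` and `weak_max`, the "stroke runs along the edge" rule, and all names here are assumptions for illustration, not INSPIRE's actual filter.

```python
import math
from typing import List, Optional, Tuple

RGBA = Tuple[int, int, int, int]
Image = List[List[Optional[RGBA]]]  # None marks background pixels
Point = Tuple[int, int]


def classify_edges(image: Image, strong_max: int = 30,
                   weak_max: int = 80) -> Tuple[List[Point], List[Point]]:
    """Split edge points into strong and weak sets by their alpha (N.V) value.

    Hypothetical double threshold: alpha <= strong_max is a strong edge point,
    strong_max < alpha <= weak_max is a weak one, anything higher is not an edge.
    """
    strong: List[Point] = []
    weak: List[Point] = []
    for y, row in enumerate(image):
        for x, px in enumerate(row):
            if px is None:
                continue  # background: no surface, so no stroke
            alpha = px[3]
            if alpha <= strong_max:
                strong.append((x, y))
            elif alpha <= weak_max:
                weak.append((x, y))
    return strong, weak


def stroke_angle(px: RGBA) -> float:
    """Orientation (radians) of a stroke at an edge point.

    Assumes the stroke runs along the edge, i.e. perpendicular to the
    screen-space normal (nx, ny) decoded from the R and G channels.
    """
    nx = px[0] / 255 * 2 - 1
    ny = px[1] / 255 * 2 - 1
    return math.atan2(ny, nx) + math.pi / 2


# Usage: a 3x2 toy image.
image: Image = [
    [None, (255, 128, 0, 10), (200, 128, 0, 60)],
    [None, (128, 255, 0, 5), (128, 128, 0, 250)],
]
strong, weak = classify_edges(image)
# strong == [(1, 0), (1, 1)], weak == [(2, 0)]
```

Each list would then be drawn with its own stroke style, matching panels (g) and (h). A gradient-based filter, for example a Sobel operator on the alpha channel, is a common alternative to a plain threshold; which one INSPIRE uses is not stated on this page.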

@InProceedings{inspire:2003, author = {Minh X. Nguyen and Hui Xu and Xiaoru Yuan and Baoquan Chen}, title = {INSPIRE: An Interactive Image Assisted Non-Photorealistic Rendering System}, booktitle = {Proceedings of the 11th Pacific Conference on Computer Graphics and Applications}, year = {2003}, isbn = {0-7695-2028-6}, pages = {472--477}, publisher = {IEEE Computer Society}, }


Minh X. Nguyen, Hui Xu, Xiaoru Yuan, and Baoquan Chen, "INSPIRE: An Interactive Image Assisted Non-Photorealistic Rendering System." In Proceedings of the 11th Pacific Conference on Computer Graphics and Applications (PG'03), pages 472-477, Canmore, Canada, October 8-10, 2003.

Download: paper (PDF, 1.45 MB); longer version (PDF, 2.10 MB).