Description

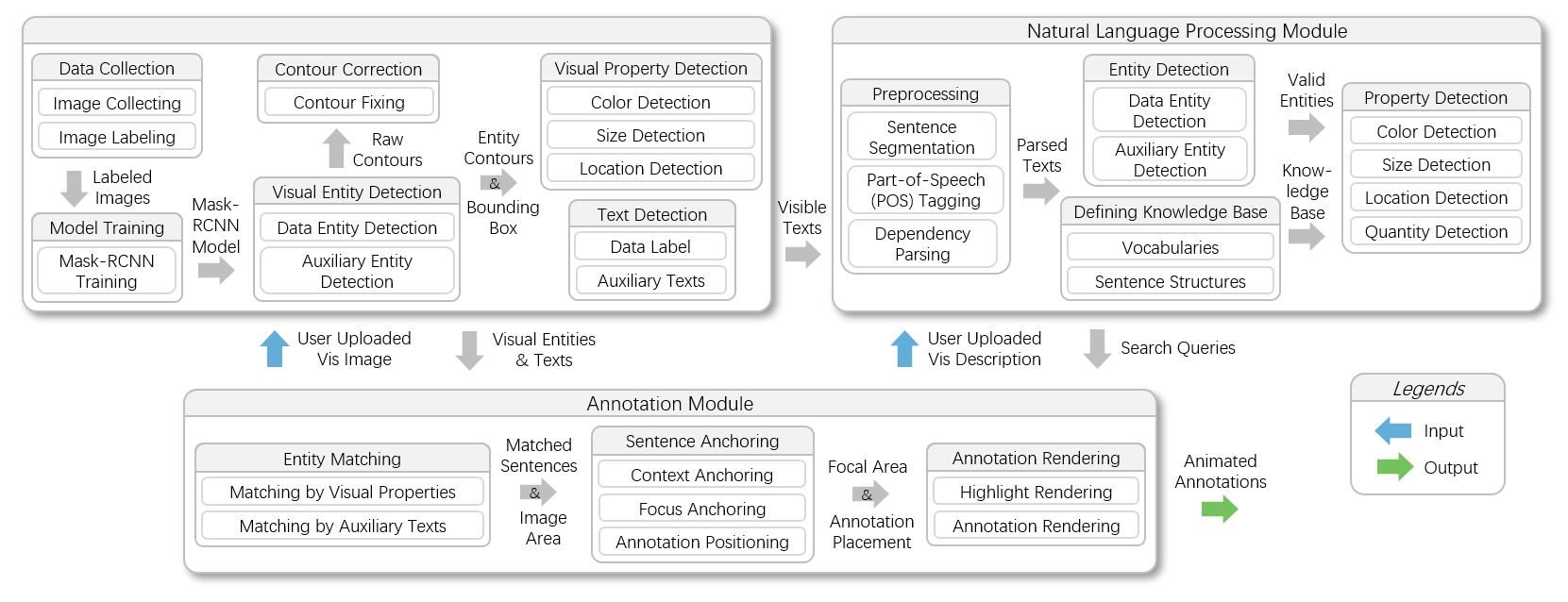

We propose a technique for automatically annotating visualizations according to their textual descriptions. In our approach, visual elements in the target visualization, along with their visual properties, are identified and extracted with a Mask R-CNN model. Meanwhile, the description is parsed to generate visual search requests. Based on the identification results and the search requests, each descriptive sentence is displayed as an annotation beside the focal area it describes. Different sentences are presented in different scenes of the generated animation, producing a vivid step-by-step presentation. With a user-customized style, the animation can guide the audience’s attention through appropriate highlighting, such as emphasizing specific features or isolating part of the data. We demonstrate the utility and usability of our method through a user study with various use cases.
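The matching step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the Mask R-CNN detection and the sentence parsing are both stubbed with hand-written data, and every name here (`VisualElement`, `SearchRequest`, `match_elements`, `focal_area`) is hypothetical. It only shows how a search request derived from a sentence might select extracted elements and yield a focal area to annotate.

```python
from dataclasses import dataclass, field


@dataclass
class VisualElement:
    """One extracted element (assumed output of a detection step)."""
    kind: str      # e.g. "bar"
    color: str     # a visual property, e.g. "red"
    bbox: tuple    # (x, y, width, height) in pixels


@dataclass
class SearchRequest:
    """A query assumed to be parsed from one descriptive sentence."""
    sentence: str
    kind: str
    filters: dict = field(default_factory=dict)  # property name -> wanted value


def match_elements(request, elements):
    """Return the elements whose kind and properties satisfy the request."""
    return [
        e for e in elements
        if e.kind == request.kind
        and all(getattr(e, name, None) == value
                for name, value in request.filters.items())
    ]


def focal_area(elements):
    """Union of the bounding boxes, as (x, y, width, height)."""
    x0 = min(e.bbox[0] for e in elements)
    y0 = min(e.bbox[1] for e in elements)
    x1 = max(e.bbox[0] + e.bbox[2] for e in elements)
    y1 = max(e.bbox[1] + e.bbox[3] for e in elements)
    return (x0, y0, x1 - x0, y1 - y0)


# Hand-written stand-ins for the detection and parsing results.
elements = [
    VisualElement("bar", "red", (0, 0, 10, 50)),
    VisualElement("bar", "blue", (20, 0, 10, 80)),
    VisualElement("bar", "red", (40, 10, 10, 40)),
]
request = SearchRequest("The red bars stay low.", "bar", {"color": "red"})

matched = match_elements(request, elements)
area = focal_area(matched)
```

In this sketch, the sentence would be placed beside `area`, and the unmatched elements could be dimmed to isolate the described part of the data.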

Citation

Chufan Lai, Zhixian Lin, Ruike Jiang, Yun Han, Can Liu, and Xiaoru Yuan. Automatic Annotation Synchronizing with Textual Description for Visualization. In Proceedings of ACM Conference on Human Factors in Computing Systems (CHI 2020), Honolulu, Hawai'i, USA. April 25-30, 2020.

Images

Annotation Pipeline

Annotation Example